Top Papers of the week (Nov 20 - Nov 26)

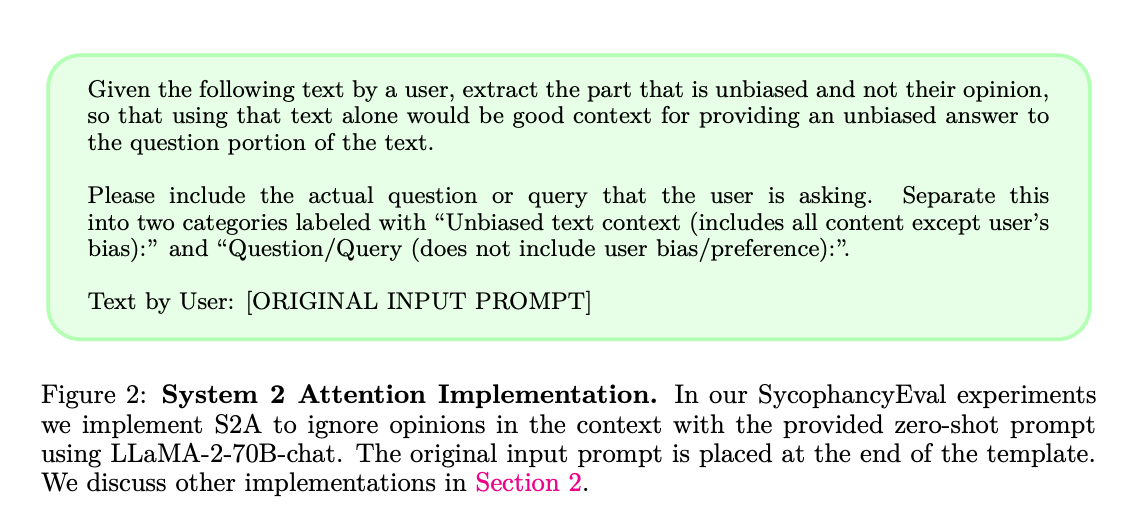

1. System 2 Attention (paper)

System 2 Attention (S2A) refines Transformer-based large language models by having them focus only on the relevant parts of the context. This improves factuality and objectivity, reduces irrelevant and biased content in responses, and outperforms standard models on tasks such as QA and math word problems.
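To make the "focus on relevant context" idea concrete, here is a minimal sketch of a two-pass prompting scheme: one call rewrites the context, a second call answers from the rewrite. It is an illustration under assumptions, not the paper's implementation: the function name `s2a_answer`, the `llm` callable (any function mapping a prompt string to a completion string), and the prompt wording are all hypothetical.

```python
from typing import Callable


def s2a_answer(context: str, question: str, llm: Callable[[str], str]) -> str:
    """Two-pass prompting: rewrite the context first, then answer from the rewrite.

    `llm` is a hypothetical callable (prompt -> completion); plug in any client.
    """
    # Pass 1: ask the model to keep only relevant, unbiased material.
    rewrite_prompt = (
        "Extract only the parts of the text below that are relevant and "
        "unbiased for answering the question. Leave out opinions and "
        "unrelated details.\n\n"
        f"Text: {context}\n\nQuestion: {question}"
    )
    filtered_context = llm(rewrite_prompt)

    # Pass 2: answer using the filtered context only, so the original
    # distracting text never reaches the final prompt.
    answer_prompt = (
        f"Context: {filtered_context}\n\nQuestion: {question}\nAnswer:"
    )
    return llm(answer_prompt)
```

The design point is that the second prompt is built from the model's own rewrite rather than the raw input, at the cost of one extra model call per query.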

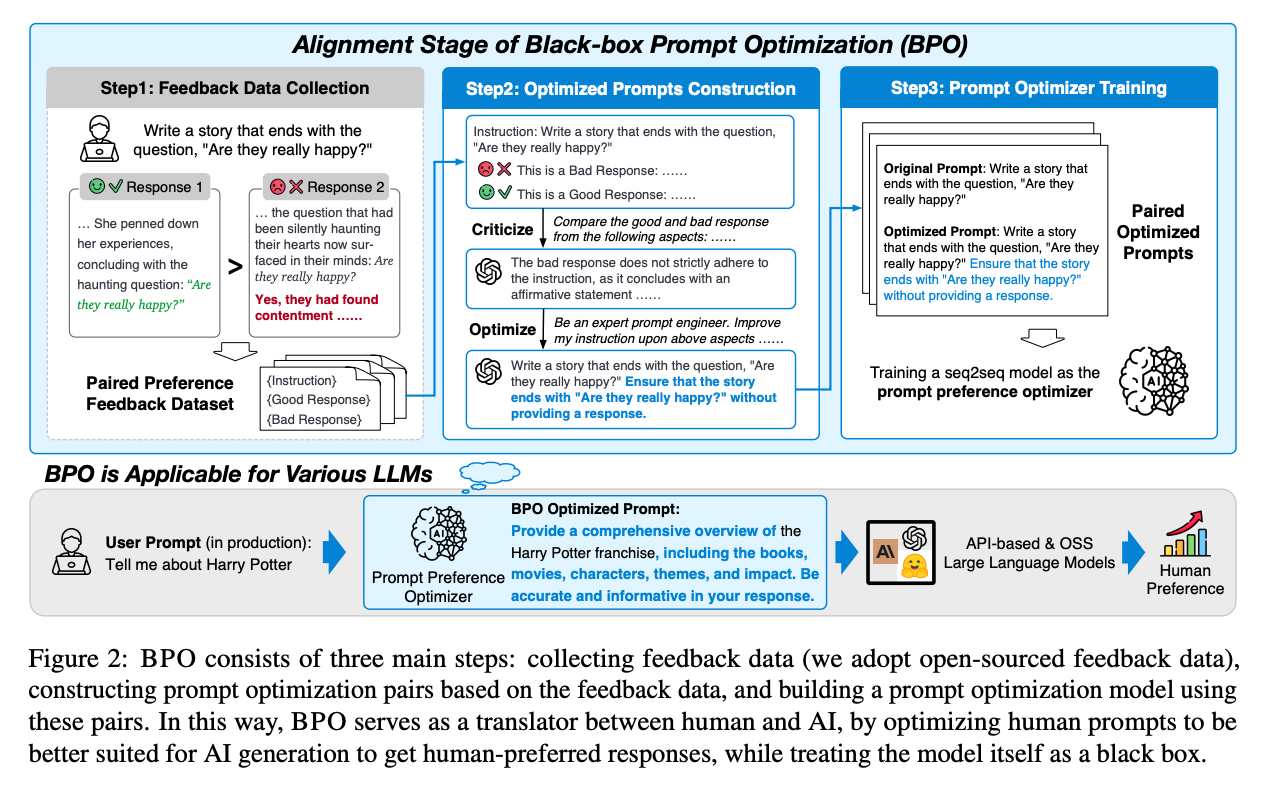

2. Black-Box Prompt Optimization: Aligning Large Language Models without Model Training (paper | code)

Black-Box Prompt Optimization (BPO) aligns large language models such as the GPT family with user intent by optimizing prompts rather than the model, offering a resource-efficient alternative to further training. BPO-aligned ChatGPT and GPT-4 show notable win-rate increases, and the approach surpasses other methods.

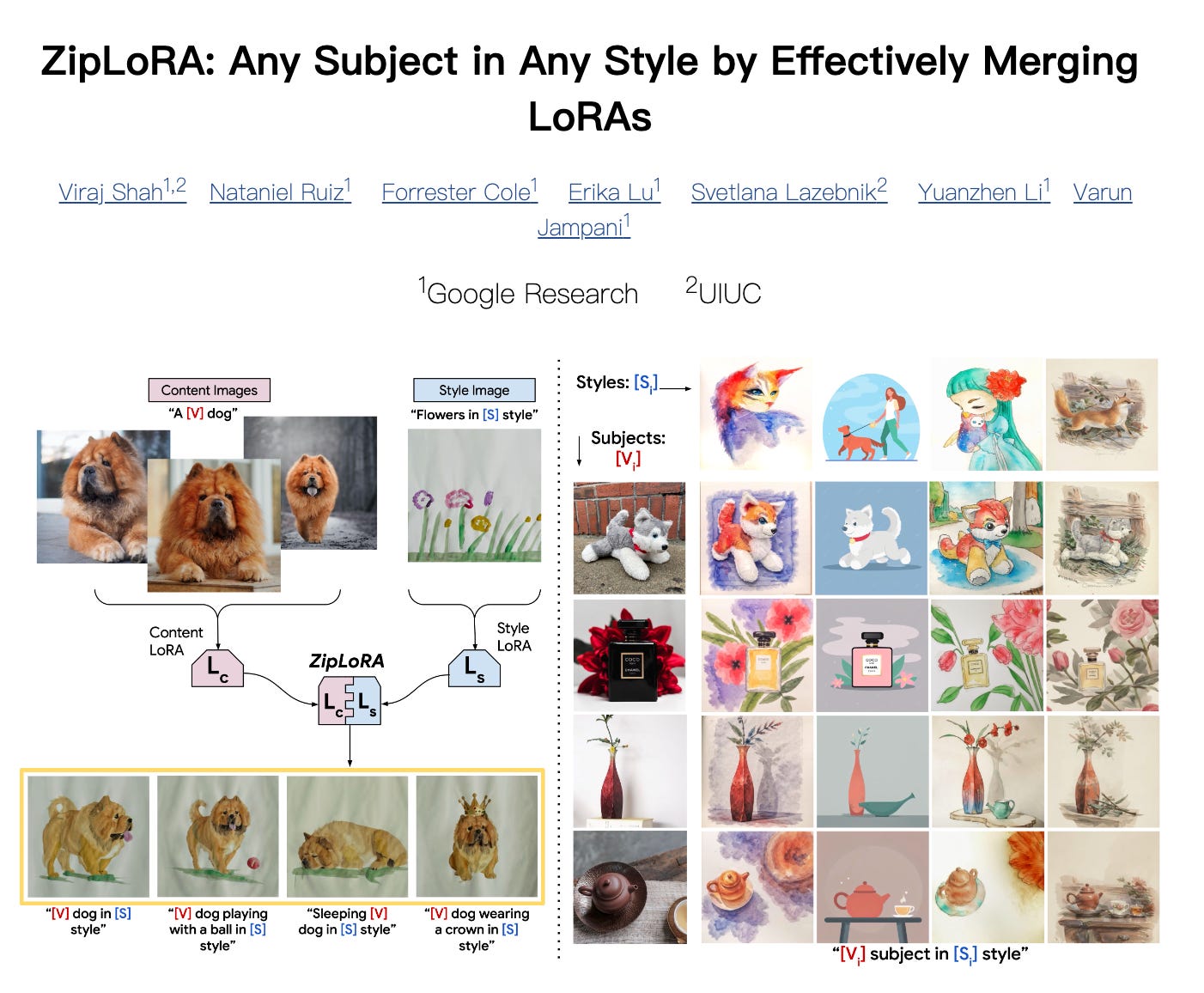

3. ZipLoRA: Any Subject in Any Style by Effectively Merging LoRAs (paper | webpage)

ZipLoRA, a novel method for concept-driven personalization in generative models, effectively merges independently trained style and subject low-rank adaptations (LoRAs). It addresses the limitations of existing techniques, which often compromise either subject or style fidelity. ZipLoRA enables generation in any user-defined style and subject with improved fidelity, outperforming baselines in a range of combinations while maintaining recontextualization capabilities.

4. AutoStory: Generating Diverse Storytelling Images with Minimal Human Effort (paper | webpage)

AutoStory is an automated story visualization system that generates diverse, high-quality, and consistent story images with minimal human input. It combines the comprehension and planning capabilities of large language models for layout planning with the power of text-to-image models for sophisticated image creation. The system employs sparse control conditions like bounding boxes for layout and dense conditions such as sketches for detailed image generation. A dedicated module transforms simple layouts into detailed controls, enhancing image quality and user interaction. The system also generates multi-view consistent character images, reducing dependence on manual image collection or creation.

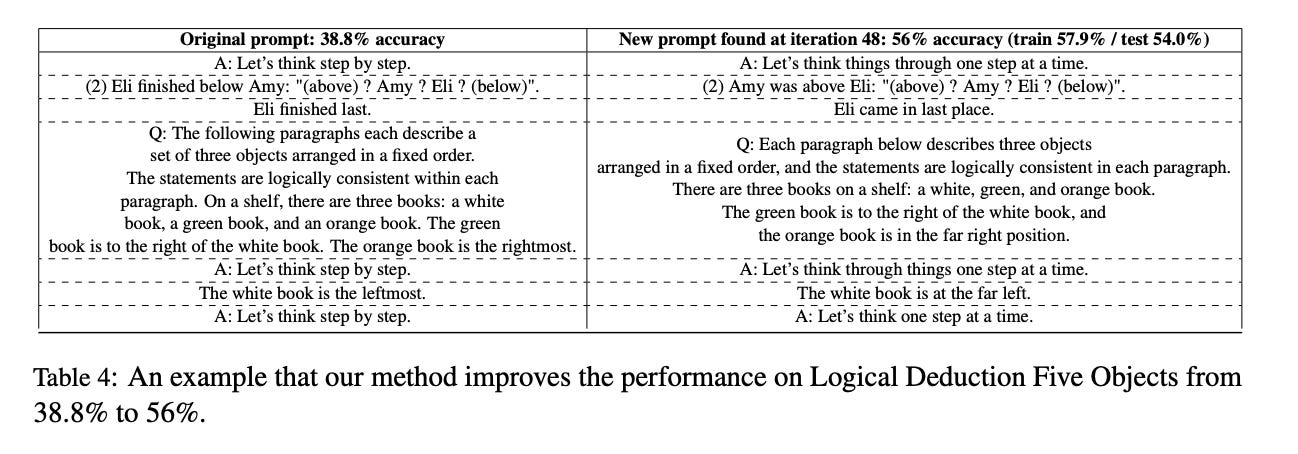

5. Automatic Engineering of Long Prompts (paper)

This paper investigates automatic engineering of long prompts for large language models (LLMs), a challenging task due to its vast search space. It compares the efficiency of greedy algorithms with beam search and genetic algorithms in this context. The study introduces novel techniques incorporating search history to improve LLM-based mutation effectiveness in the search algorithm. Results show a 9.2% average accuracy gain across eight tasks in Big Bench Hard, emphasizing the importance of automating prompt designs for maximizing LLM capabilities.
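As a rough illustration of search over long prompts, the sketch below treats a prompt as a list of sentences, mutates one sentence at a time, and keeps the best candidates in a small beam (a beam width of 1 reduces to plain greedy search). Everything here is an assumption made for illustration: the names `beam_search_prompt`, `mutate`, and `score` are hypothetical, `mutate` stands in for an LLM-based rewriter that can read the search history, and `score` stands in for accuracy on a held-out set. It is not the paper's algorithm.

```python
import random
from typing import Callable, List, Tuple

Prompt = List[str]  # a long prompt represented as a non-empty list of sentences
History = List[Tuple[str, str, float]]  # (old sentence, new sentence, score change)


def beam_search_prompt(
    initial: Prompt,
    mutate: Callable[[str, History], str],  # hypothetical LLM-based rewriter
    score: Callable[[Prompt], float],       # hypothetical task-accuracy scorer
    steps: int = 20,
    beam_width: int = 2,
    seed: int = 0,
) -> Tuple[Prompt, float]:
    rng = random.Random(seed)
    beam = [(score(initial), list(initial))]
    history: History = []

    for _ in range(steps):
        # Parents stay in the candidate pool, so the best score never drops.
        candidates = list(beam)
        for parent_score, parent in beam:
            i = rng.randrange(len(parent))            # pick one sentence to edit
            new_sentence = mutate(parent[i], history)  # rewriter may use history
            child = parent[:i] + [new_sentence] + parent[i + 1:]
            child_score = score(child)
            history.append((parent[i], new_sentence, child_score - parent_score))
            candidates.append((child_score, child))
        # Keep the top `beam_width` prompts by score.
        candidates.sort(key=lambda c: c[0], reverse=True)
        beam = candidates[:beam_width]

    best_score, best = beam[0]
    return best, best_score
```

Editing one sentence per step keeps each evaluation cheap relative to the size of the search space, and passing `history` to the mutation function is one simple way to let past successes and failures guide later rewrites.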

More papers: Daily Papers

AIGC News of the week (Nov 20 - Nov 26)

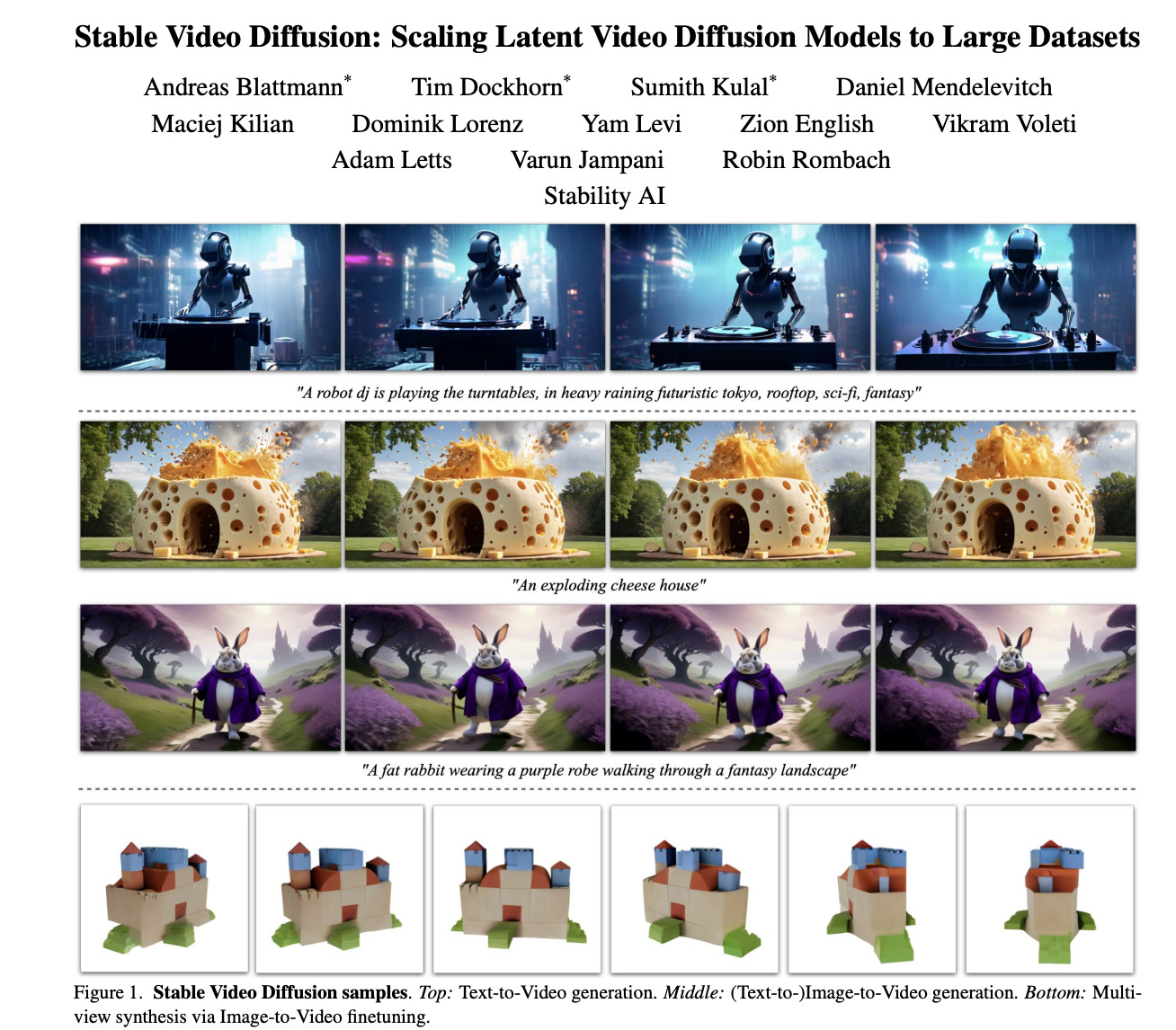

Stability AI releases its video generation model: Stable Video Diffusion

Stable Video Diffusion introduces a cutting-edge latent video diffusion model for high-resolution text-to-video and image-to-video generation. Building on latent diffusion models originally designed for 2D image synthesis, this model incorporates temporal layers and is fine-tuned on select high-quality video datasets. The paper highlights the lack of consensus in training methods and data curation strategies in the field. It outlines and evaluates three key stages for effectively training video LDMs: text-to-image pretraining, general video pretraining, and specialized high-quality video fine-tuning.

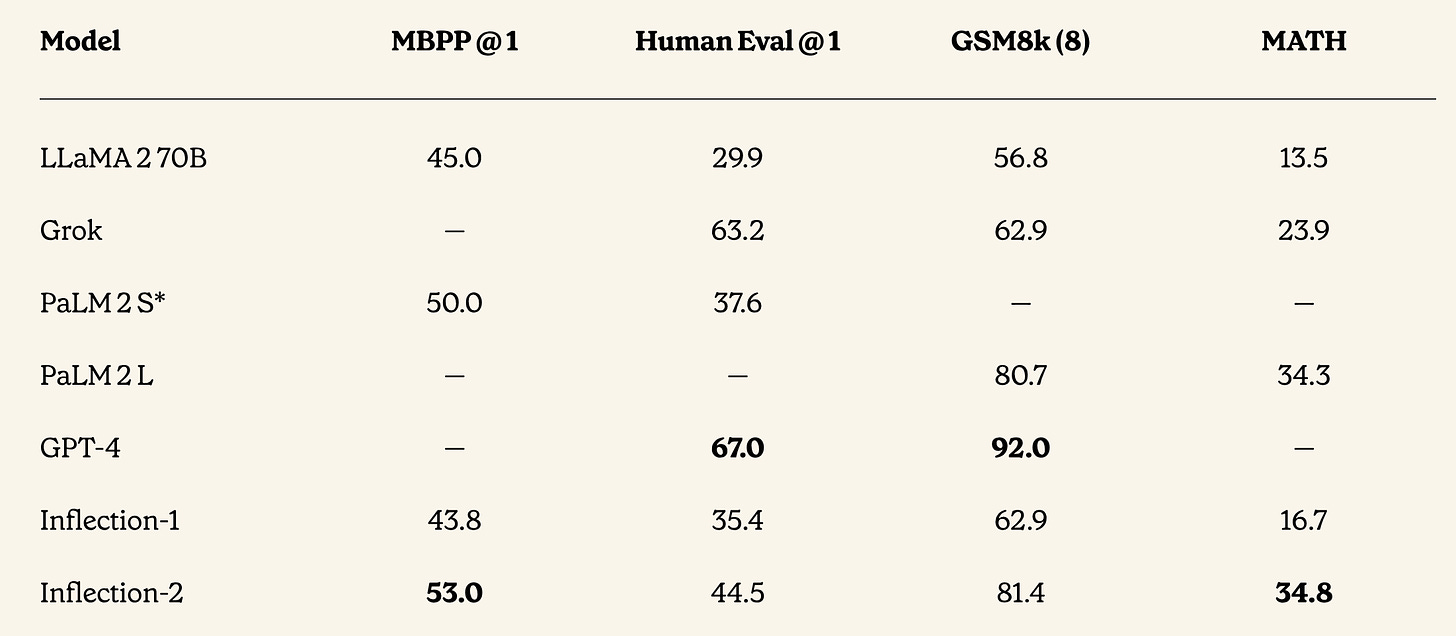

The Inflection-2 model has been released: inflection-2

Inflection-2, trained on 5,000 NVIDIA H100 GPUs in fp8 mixed precision for about 10²⁵ FLOPs, is in the same training compute class as Google's PaLM 2 Large model but outperforms it on AI benchmarks such as MMLU, TriviaQA, HellaSwag, and GSM8k. It is designed for efficient serving and will soon power Pi. The shift from A100 to H100 GPUs, combined with optimized inference, increases speed and reduces costs, even though Inflection-2 is larger than its predecessor. This marks a significant step in building personal AI for everyone, with plans to train larger models on a 22,000 GPU cluster.