DeepSeek OpenSourceWeek is Coming: What Mysterious Technologies Might Be Unveiled?

Let’s guess what kind of projects DeepSeek might open-source next week.

On February 21, DeepSeek posted a warm-up announcement on X for its Open Source Week: starting next week, it will open-source five projects over five consecutive days. I will write a detailed article on each project on the day it is released, so feel free to follow me for the latest analyses.

Today, I’ll make some predictions about which projects might be open-sourced. If I guess even one correctly, I’ll consider it a win.

First, there’s definitely going to be something related to infra.

According to the post on X, they are a small team within DeepSeek sharing small but sincere progress, namely building blocks that are already used in their online services. They particularly emphasized “small,” which to me points to code related to model inference optimization.

DeepSeek-R1 has generated significant buzz since its release, but the major inference frameworks still lack robust support for serving it efficiently. Since demand is so high right now, I suspect they might release some official implementations directly.

These optimizations could include the deployment and inference strategies described in the DeepSeek-V3 technical report, such as deploying the prefilling and decoding stages separately.
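To make the prefilling/decoding distinction concrete, here is a toy sketch, not DeepSeek's code. Prefill processes the whole prompt in one pass and fills a key/value (KV) cache; decode then emits one token per step and only computes K/V for the newest token. The "model" (scalar embeddings, fixed K/V formulas, single-head attention) and all function names are hypothetical stand-ins chosen to keep the example self-contained, and the separate GPU pools the V3 report uses for each stage are not modeled.

```python
import math
from typing import List, Tuple

KV = Tuple[float, float]  # (key, value) for one token

# Hypothetical toy "model": tokens map to scalars and K/V projections are
# fixed formulas. Real models use learned matrices over large vectors.
def embed(token: int) -> float:
    return (token % 7) / 7.0

def kv(x: float) -> KV:
    return 2.0 * x, x + 1.0

def attend(query: float, cache: List[KV]) -> float:
    # Single-head softmax attention of one query over every cached key.
    scores = [query * k for k, _ in cache]
    m = max(scores)
    weights = [math.exp(s - m) for s in scores]
    return sum(w * v for w, (_, v) in zip(weights, cache)) / sum(weights)

def next_token(hidden: float) -> int:
    return int(hidden * 100) % 50

def prefill(prompt: List[int]) -> Tuple[List[KV], int]:
    # Prefill: compute K/V for the whole prompt at once, then emit token 1.
    cache = [kv(embed(t)) for t in prompt]
    return cache, next_token(attend(embed(prompt[-1]), cache))

def decode(cache: List[KV], first: int, steps: int) -> List[int]:
    # Decode: one token per step; only the newest token's K/V is computed.
    out = [first]
    for _ in range(steps - 1):
        x = embed(out[-1])
        cache.append(kv(x))
        out.append(next_token(attend(x, cache)))
    return out

def generate_naive(prompt: List[int], steps: int) -> List[int]:
    # Baseline without a cache: recompute K/V for the full sequence each step.
    seq, out = list(prompt), []
    for _ in range(steps):
        cache = [kv(embed(t)) for t in seq]
        tok = next_token(attend(embed(seq[-1]), cache))
        out.append(tok)
        seq.append(tok)
    return out
```

Calling `decode(*prefill(prompt), steps)` yields exactly the same tokens as `generate_naive(prompt, steps)`; the cache only removes the repeated K/V work, which is why the two stages have such different compute profiles.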

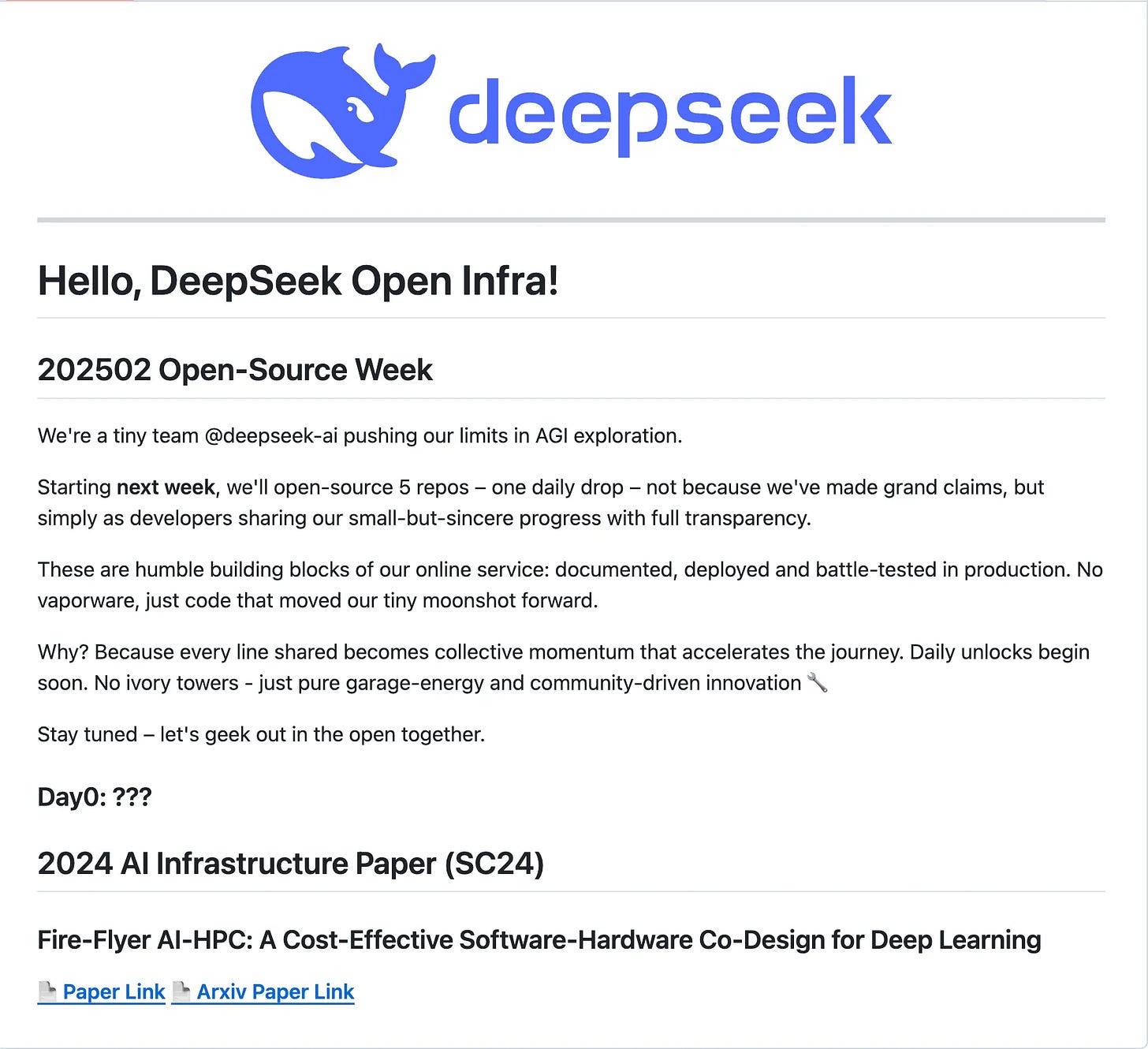

Second, the official repository index points to a paper, which is likely related.

Today, DeepSeek created an "open-infra-index" repo on GitHub, which includes a paper: Fire-Flyer AI-HPC: A Cost-Effective Software-Hardware Co-Design for Deep Learning.

The Fire-Flyer AI-HPC paper mainly discusses optimizations for a training cluster of 10,000 PCIe A100 GPUs, focusing on reducing construction costs and energy consumption. Specifically, compared with DGX-A100, it achieves about 80% of the performance while cutting costs by 60% and energy consumption by 50%. I won’t go into the detailed innovations here; interested readers can read the paper themselves.
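Taking the figures quoted above at face value, a quick back-of-envelope calculation shows what they mean in efficiency terms relative to DGX-A100:

```latex
\frac{\text{performance}}{\text{cost}} = \frac{0.8}{1 - 0.6} = \frac{0.8}{0.4} = 2.0,
\qquad
\frac{\text{performance}}{\text{energy}} = \frac{0.8}{1 - 0.5} = \frac{0.8}{0.5} = 1.6
```

That is, roughly twice the performance per unit of cost and about 1.6 times the performance per unit of energy.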

Why mention this paper? My guess is that they’ll open-source some of the system code it describes. Based on the paper, here are a few candidates:

HFReduce: A library developed specifically for efficient allreduce operations, designed to optimize GPU communication in PCIe architectures. HFReduce overlaps computation and communication through asynchronous allreduce operations, significantly boosting performance.

HaiScale: A distributed data-parallel training tool that uses HFReduce as its communication backend, enabling asynchronous allreduce operations during backpropagation to improve training efficiency.

3FS: A high-performance distributed file system designed to fully leverage the high IOPS and throughput of NVMe SSDs and RDMA networks. The 3FS system supports efficient read/write operations and achieves traffic isolation under high loads.

3FS-KV: A shared-storage distributed data processing system built on 3FS, supporting various data models (e.g., key-value storage, message queues, and object storage) with read-write separation and on-demand startup features.

HAI Platform: A time-sharing scheduling platform that manages and schedules training tasks to ensure efficient GPU resource utilization.

Checkpoint Manager: A tool for managing checkpoints during large language model training, supporting rapid recovery from hardware failures to ensure training continuity.
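To illustrate what an allreduce does, and why a two-level scheme suits nodes whose GPUs communicate over PCIe, here is a minimal single-process simulation of a generic hierarchical allreduce: gradients are first summed within each node, the per-node sums are then combined across nodes, and the result is broadcast back to every GPU. This is a sketch of the general idea under simplifying assumptions, not HFReduce's actual algorithm (the paper describes its own design); the `grads[node][gpu]` layout and the function names are hypothetical.

```python
from typing import List

Vector = List[float]

def add(a: Vector, b: Vector) -> Vector:
    return [x + y for x, y in zip(a, b)]

def hierarchical_allreduce(grads: List[List[Vector]]) -> List[List[Vector]]:
    """grads[node][gpu] is one GPU's local gradient vector.

    Returns the same layout in which every GPU holds the global sum.
    """
    # Step 1: intra-node reduce (in a real cluster: inside one machine).
    node_sums = []
    for node in grads:
        total = node[0]
        for g in node[1:]:
            total = add(total, g)
        node_sums.append(total)

    # Step 2: combine the per-node sums across nodes (over the network).
    global_sum = node_sums[0]
    for s in node_sums[1:]:
        global_sum = add(global_sum, s)

    # Step 3: broadcast a copy of the result back to every GPU on every node.
    return [[list(global_sum) for _ in node] for node in grads]
```

For two nodes with two GPUs each, `hierarchical_allreduce([[[1.0, 2.0], [3.0, 4.0]], [[5.0, 6.0], [7.0, 8.0]]])` leaves `[16.0, 20.0]` on all four GPUs. The benefit of the two levels is that only one aggregated vector per node has to cross the slower inter-node link in step 2.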

Whether they open-source one or several of these is hard to say, but I expect at least one of the systems from this paper, possibly the HAI Platform.
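Since the HAI Platform looks like the likeliest candidate, a toy illustration of time-sharing may help. The sketch below is a generic round-robin scheduler, not HAI's actual policy: a hypothetical cluster with a fixed number of GPUs runs jobs in fixed time slices, and a job that has not finished is preempted and re-queued (in practice it would have to save a checkpoint first). The `Job` fields and `time_share` function are illustrative assumptions.

```python
from collections import deque
from dataclasses import dataclass
from typing import Dict, List

@dataclass
class Job:
    name: str
    gpus: int       # GPUs the job needs while it runs
    remaining: int  # units of work left

def time_share(jobs: List[Job], total_gpus: int, slice_len: int) -> Dict[str, int]:
    """Toy round-robin time-sharing; returns each job's finish time."""
    queue = deque(jobs)
    clock = 0
    finished: Dict[str, int] = {}
    while queue:
        # Admit queued jobs in order while enough GPUs are free (first fit).
        running: List[Job] = []
        free = total_gpus
        for _ in range(len(queue)):
            job = queue.popleft()
            if job.gpus <= free:
                running.append(job)
                free -= job.gpus
            else:
                queue.append(job)  # keeps its place ahead of preempted jobs
        if not running:
            raise ValueError("a job needs more GPUs than the cluster has")
        # Run admitted jobs for one slice, or less if they finish early.
        used = 0
        for job in running:
            work = min(slice_len, job.remaining)
            job.remaining -= work
            used = max(used, work)
            if job.remaining == 0:
                finished[job.name] = clock + work
            else:
                queue.append(job)  # preempt: in practice, checkpoint here
        clock += used
    return finished
```

With `time_share([Job("A", 8, 3), Job("B", 4, 2), Job("C", 4, 2)], total_gpus=8, slice_len=2)`, job A is preempted after its first slice so that B and C can share the cluster; B and C finish at t=4, and A resumes and finishes at t=5.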

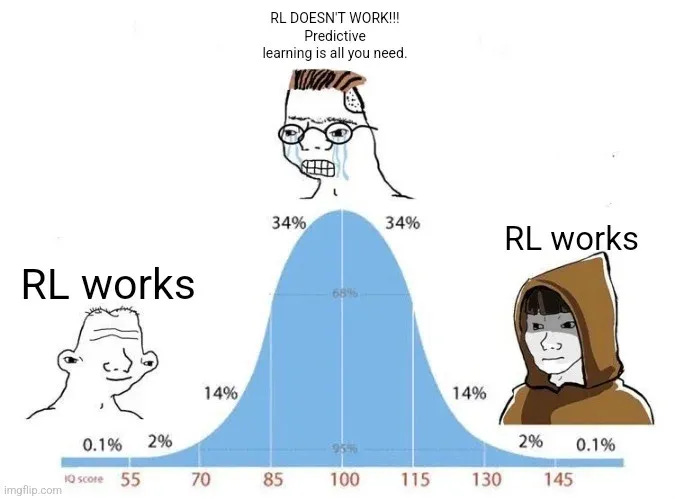

Third, an RL training framework? It’s possible.

They might open-source some of the RL methods behind DeepSeek-R1, since RL is genuinely hard to train well. However, I think the likelihood of this is low.
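For context, the DeepSeek-R1 paper trains with GRPO (Group Relative Policy Optimization), which samples a group of answers per prompt and scores each one against its own group's statistics instead of using a learned value model. Below is a minimal sketch of only that group-relative advantage step, assuming simple scalar rewards (for example 1 for a correct answer and 0 otherwise). The function name, the use of population standard deviation, and the `eps` term are assumptions; the policy loss, clipping, and KL penalty are omitted.

```python
import statistics
from typing import List

def group_relative_advantages(rewards: List[float], eps: float = 1e-6) -> List[float]:
    """Normalize each sampled answer's reward against its own group."""
    mean = statistics.fmean(rewards)
    std = statistics.pstdev(rewards)  # population std; implementations vary
    # eps avoids division by zero when every answer got the same reward.
    return [(r - mean) / (std + eps) for r in rewards]
```

For rewards `[1.0, 0.0, 0.0, 1.0]`, the correct answers get an advantage of about +1 and the incorrect ones about -1. If every answer in a group receives the same reward, all advantages are zero and that group contributes no learning signal.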

Fourth, an inference framework? The most likely.

DeepSeek’s internal inference optimization is top-notch, and their API pricing is very low. However, inference costs for external vendors remain high. If they open-source an inference framework, it could help major frameworks optimize efficiency more quickly.

For example, the recently announced NSA (Native Sparse Attention: Hardware-Aligned and Natively Trainable Sparse Attention) might come with code. It’d be even better if they also included something like distributed KV caching.
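NSA combines several attention branches (compressed tokens, selected blocks, and a sliding window). The sketch below illustrates only the idea behind block selection, in a deliberately simplified form that is not NSA's algorithm: keys are split into fixed-size blocks, each block is scored by the query's dot product with the block's mean key, and the query attends only to tokens in the top-k blocks. The scoring rule, `block_size`, `top_k`, and the function names are assumptions for illustration.

```python
import math
from typing import List

Vec = List[float]

def dot(a: Vec, b: Vec) -> float:
    return sum(x * y for x, y in zip(a, b))

def dense_attend(q: Vec, keys: List[Vec], values: List[Vec]) -> Vec:
    # Standard scaled dot-product attention for a single query.
    scores = [dot(q, k) / math.sqrt(len(q)) for k in keys]
    m = max(scores)
    w = [math.exp(s - m) for s in scores]
    z = sum(w)
    return [sum(wi * v[d] for wi, v in zip(w, values)) / z
            for d in range(len(values[0]))]

def block_sparse_attend(q: Vec, keys: List[Vec], values: List[Vec],
                        block_size: int, top_k: int) -> Vec:
    # Split key positions into contiguous blocks.
    blocks = [list(range(i, min(i + block_size, len(keys))))
              for i in range(0, len(keys), block_size)]

    def block_score(idx: List[int]) -> float:
        # Crude stand-in: NSA derives block importance from its learned
        # compression branch; here we just use the block's mean key.
        mean_key = [sum(keys[i][d] for i in idx) / len(idx) for d in range(len(q))]
        return dot(q, mean_key)

    # Keep only the top_k highest-scoring blocks, in original token order.
    chosen = sorted(range(len(blocks)),
                    key=lambda b: block_score(blocks[b]), reverse=True)[:top_k]
    kept = sorted(i for b in chosen for i in blocks[b])
    return dense_attend(q, [keys[i] for i in kept], [values[i] for i in kept])
```

With `top_k` blocks of `block_size` tokens, each query touches at most `top_k * block_size` keys instead of the whole context, which is where the savings come from on long sequences; when `top_k` covers every block, the result equals dense attention.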

Fifth, a big guess about the open-source license.

Currently, DeepSeek uses the MIT license, one of the most permissive licenses available. Many vendors’ licenses are stricter: LLaMA, for instance, has changed its license several times and is generally not considered truly open source.

Here’s a rundown of licenses for some popular models:

DeepSeek: MIT, fully open.

Qwen: Apache 2.0 + additional terms for some models.

Mistral: Apache 2.0 for many models; some, such as Mistral Large 2, use the more restrictive Mistral Research License.

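LLaMA: a custom community license (from Llama 2 onward) that permits commercial use with restrictions, such as requiring a separate license from Meta above 700 million monthly active users; the most restrictive of the four.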

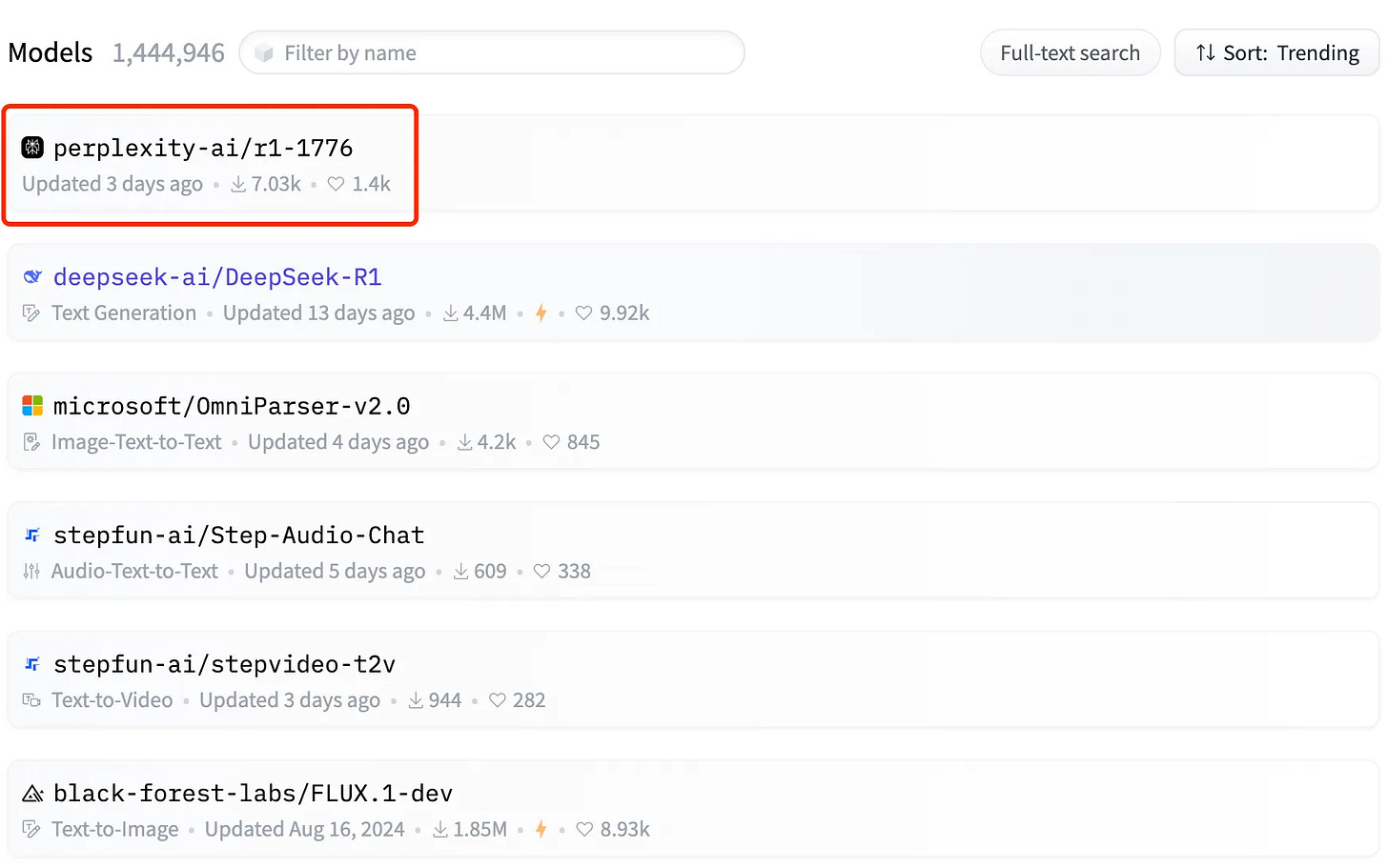

Perplexity’s recent r1-1776 fiasco was frankly clownish, and it might push DeepSeek toward a stricter license. Still, I’m guessing these five projects will stick with MIT. I’ll share more details on the licenses once they’re officially announced.

Thanks, DeepSeek. Next week, I’ll continue with analyses of the five open-sourced projects.