Today marks the fourth day of DeepSeek Open Source Week, and DeepSeek has introduced three projects, all centered around optimizing parallel strategies for V3/R1 training and inference.

DualPipe is a bidirectional pipeline parallelism algorithm designed for computation-communication overlap in V3/R1 training. Meanwhile, EPLB serves as an expert-parallel load balancer for V3/R1.

The final project, profile-data, primarily releases analytical data from DeepSeek’s infrastructure for training and inference. So far, it includes data on Prefilling for both training and inference, while the Decoding analysis data for inference has yet to be made public.

Today, we’ll dive into an analysis of the DualPipe and EPLB projects, both of which lean toward engineering optimization.

DualPipe

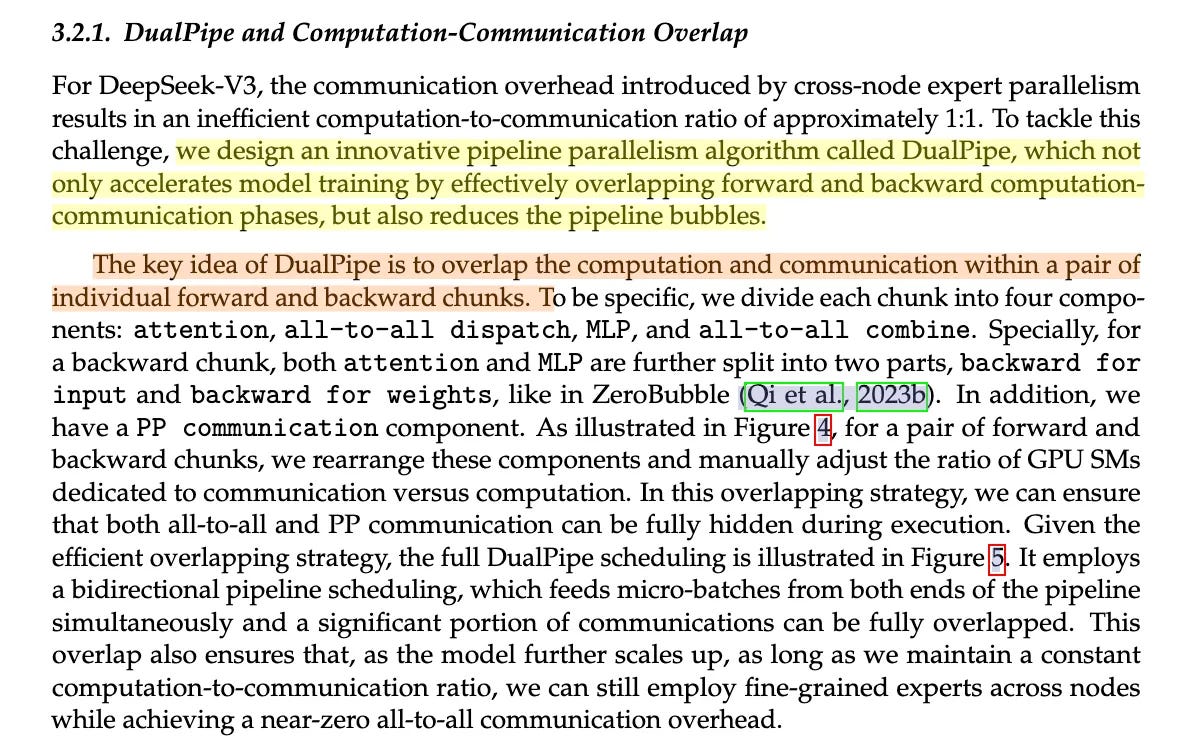

DualPipe is mentioned in the DeepSeek V3 paper as a bidirectional pipeline parallelism communication algorithm, mainly used to optimize data interaction and training efficiency in large-scale models.

Key Features:

Computation-Communication Overlap

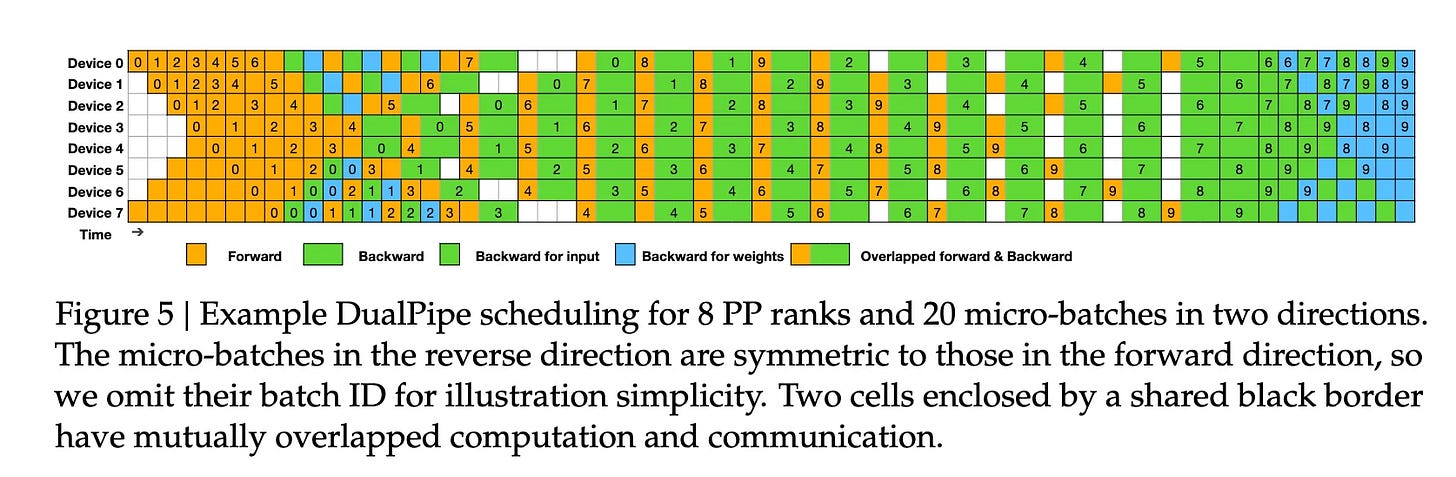

DualPipe’s design aims to maximize cluster computing performance by achieving full overlap of computation and communication during forward and backward passes, reducing idle wait times typical in traditional pipeline parallelism. This is especially critical for expert parallelism (Expert Parallelism) across nodes in MoE models.Bidirectional Scheduling

DualPipe employs a bidirectional scheduling strategy, feeding data from both ends of the pipeline simultaneously to reuse hardware resources efficiently. It also incorporates a sophisticated yet highly effective 8-step scheduling strategy.Memory Optimization

DualPipe deploys the shallowest layers (including the embedding layer) and the deepest layers (including the output layer) on the same pipeline level (PP Rank), enabling physical sharing of parameters and gradients to further enhance memory efficiency.

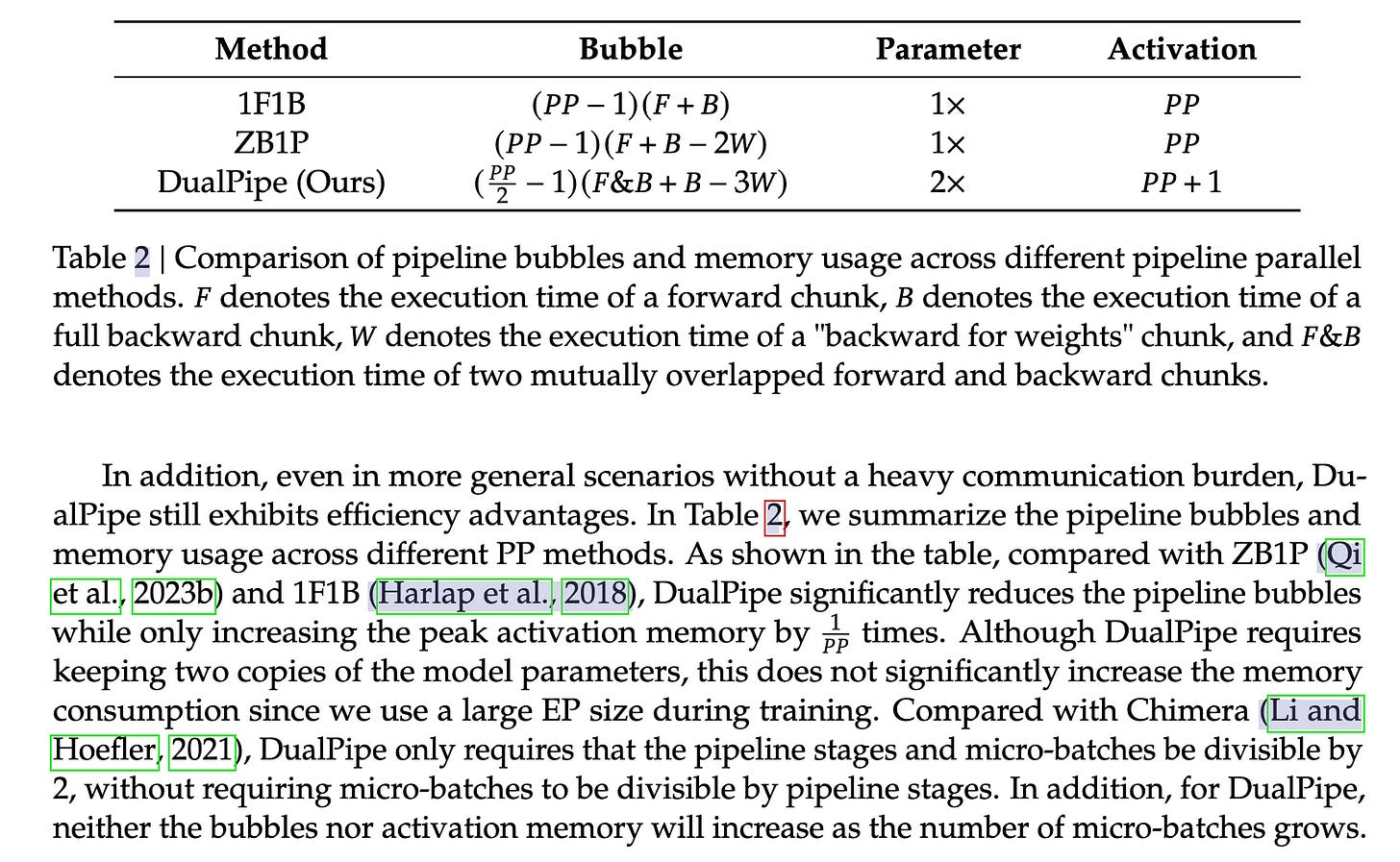

Pipeline Bubble and Memory Usage Comparison (Pipeline bubble refers to idle wait time)

For those interested, you can check out the code—it’s not very long and is great for learning.

EPLB

EPLB (Expert Parallelism Load Balancer) is primarily designed to optimize the distributed deployment of MoE models. It ensures load balancing among different experts in the MoE portion by replicating shared experts and fine-grained high-load experts across multiple GPUs in the cluster. This allows GPUs to handle more "hot data" (data sent to shared experts) efficiently.

EPLB isn’t detailed in DeepSeek’s paper, and its code is remarkably concise at just 160 lines.

Key Features:

Load Balancing Optimization

It replicates high-load experts (a strategy we can call "redundant expert strategy") and uses heuristic adjustments for expert allocation to ensure balanced workloads across GPUs.Hierarchical Load Balancing

EPLB adopts a three-tier structure: node-level → intra-node expert replication → GPU allocation. It prioritizes assigning experts from the same group to the same node to minimize cross-node data transfers, then ensures load balancing at each layer. This approach, combined with DeepSeek V3’s Group-Limited Expert Routing strategy, significantly boosts distributed training efficiency.Dynamic Scheduling Strategy

EPLB dynamically selects load balancing strategies based on the situation—using a hierarchical strategy during the prefilling phase and a global strategy during the decoding phase.

Let’s Look at the Code:

Redundant Expert Strategy

def replicate_experts(weight: torch.Tensor, num_phy: int):

# Replicate high-load experts

for i in range(num_log, num_phy):

redundant_indices = (weight / logcnt).max(dim=-1).indices

phy2log[:, i] = redundant_indices

logcnt[arangen, redundant_indices] += 1Hierarchical Load Balancing

def rebalance_experts_hierarchical():

# Step 1: Pack expert groups to nodes

tokens_per_group = weight.unflatten(-1, (num_groups, group_size)).sum(-1)

group_pack_index, group_rank_in_pack = balanced_packing(tokens_per_group, num_nodes)

# Step 2: Build redundant experts within nodes

tokens_per_mlog = weight.gather(-1, mlog2log).view(-1, num_logical_experts // num_nodes)

# Step 3: Pack physical experts to GPUs

tokens_per_phy = (tokens_per_mlog / mlogcnt).gather(-1, phy2mlog)Dynamic Scheduling Strategy

def rebalance_experts():

if num_groups % num_nodes == 0:

# Use hierarchical strategy

phy2log, phyrank, logcnt = rebalance_experts_hierarchical()

else:

# Use global strategy

phy2log, phyrank, logcnt = replicate_experts()Interested readers can explore the full code.

Tomorrow is the final day of DeepSeek Open Source Week—will they drop a heavyweight open-source project? Let’s wait and see!