Daily Papers

1. TrailBlazer: Trajectory Control for Diffusion-Based Video Generation ( paper | webpage )

Key Points:

The paper introduces TrailBlazer, an algorithm for trajectory control in diffusion-based video generation, which uses bounding boxes (bboxes) to guide subjects in synthesized videos.

TrailBlazer enhances controllability without requiring neural network training, finetuning, or optimization at inference time.

The method allows users to position subjects by keyframing their bboxes, control the size of the bboxes to produce perspective effects, and influence subject behavior through text prompts.

The algorithm is efficient, with negligible additional computation compared to the underlying pre-trained model.

The resulting motion in the synthesized videos is surprisingly natural, with emergent effects such as perspective and movement towards the virtual camera.

Advantages:

Novel approach using high-level bounding boxes for casual users.

Position, size, and prompt trajectory control without detailed masks.

Simplicity in implementation with minimal code modifications.

Efficiency: negligible additional computation on top of the underlying pre-trained model.

Summary:

TrailBlazer enables intuitive trajectory control in diffusion-based video generation using bounding boxes, offering a simple and efficient method for users to guide subjects' motion and appearance without the need for complex training or optimization.
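To make the keyframing idea concrete, here is a minimal sketch of how a few user-placed boxes could be expanded into one box per frame. It assumes simple linear interpolation between keyframes and normalized `(left, top, right, bottom)` coordinates; the function name `interpolate_bboxes` and the data layout are hypothetical, and the sketch does not cover how TrailBlazer actually uses the boxes to steer the diffusion model.

```python
from bisect import bisect_right
from typing import List, Tuple

# Hypothetical layout: (left, top, right, bottom), normalized to [0, 1].
BBox = Tuple[float, float, float, float]
Keyframe = Tuple[int, BBox]  # (frame index, bbox at that frame)


def interpolate_bboxes(keyframes: List[Keyframe], num_frames: int) -> List[BBox]:
    """Expand sparse bbox keyframes into one bbox per frame (linear interpolation)."""
    if not keyframes:
        raise ValueError("at least one keyframe is required")
    keyframes = sorted(keyframes)
    frames = [f for f, _ in keyframes]
    result: List[BBox] = []
    for t in range(num_frames):
        if t <= frames[0]:
            # Before the first keyframe: hold the first box.
            result.append(keyframes[0][1])
        elif t >= frames[-1]:
            # After the last keyframe: hold the last box.
            result.append(keyframes[-1][1])
        else:
            # Find the pair of keyframes surrounding frame t.
            i = bisect_right(frames, t)
            (f0, b0), (f1, b1) = keyframes[i - 1], keyframes[i]
            w = (t - f0) / (f1 - f0)
            result.append(tuple((1 - w) * a + w * b for a, b in zip(b0, b1)))
    return result


# A small box on the left grows into a large box on the right over 9 frames.
boxes = interpolate_bboxes(
    [(0, (0.0, 0.4, 0.2, 0.6)), (8, (0.4, 0.2, 1.0, 0.8))],
    num_frames=9,
)
```

Growing the box over time, as in the usage above, is the kind of size control the summary associates with perspective effects such as a subject approaching the camera.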

2. LLaMA Beyond English: An Empirical Study on Language Capability Transfer ( paper )

Key Points:

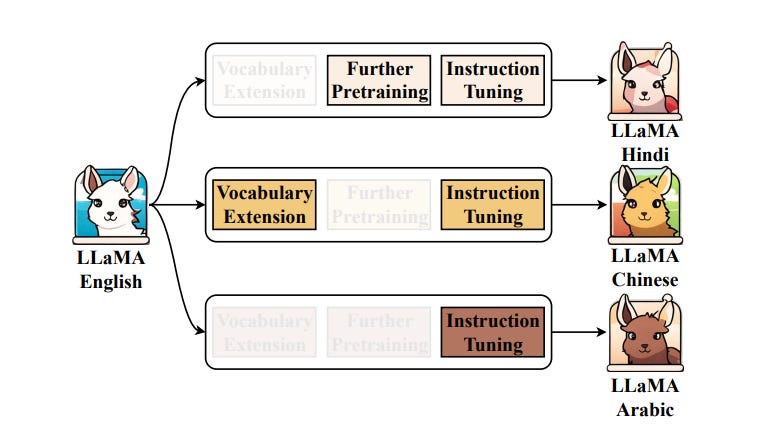

The paper investigates the transferability of language capabilities from English to non-English languages in LLaMA models.

It empirically analyzes the impact of vocabulary extension, further pretraining, and instruction tuning on the transfer process.

The study finds that vocabulary extension is not favorable for small-scale incremental pretraining.

The paper demonstrates that comparable performance to state-of-the-art models can be achieved with less than 1% of the pretraining data.

The results across thirteen low-resource languages show similar trends, suggesting that multilingual joint training is effective.

The paper observes instances of code-switching during transfer training, indicating cross-lingual alignment within LLaMA.

Advantages:

Comprehensive empirical investigation with extensive GPU hours.

Analysis of key factors affecting language capability transfer.

Demonstration of efficient transfer with minimal additional data.

Validation of findings across multiple low-resource languages.

Observation of code-switching, suggesting internalized cross-lingual alignment.

Summary:

This paper provides insights into effectively transferring LLaMA's language capabilities to non-English languages with minimal additional pretraining, offering guidance for developing non-English LLMs.

3. A Comprehensive Study of Knowledge Editing for Large Language Models ( paper )

Key Points:

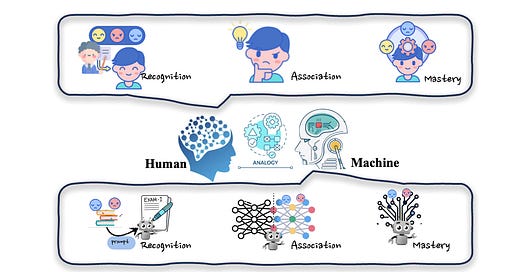

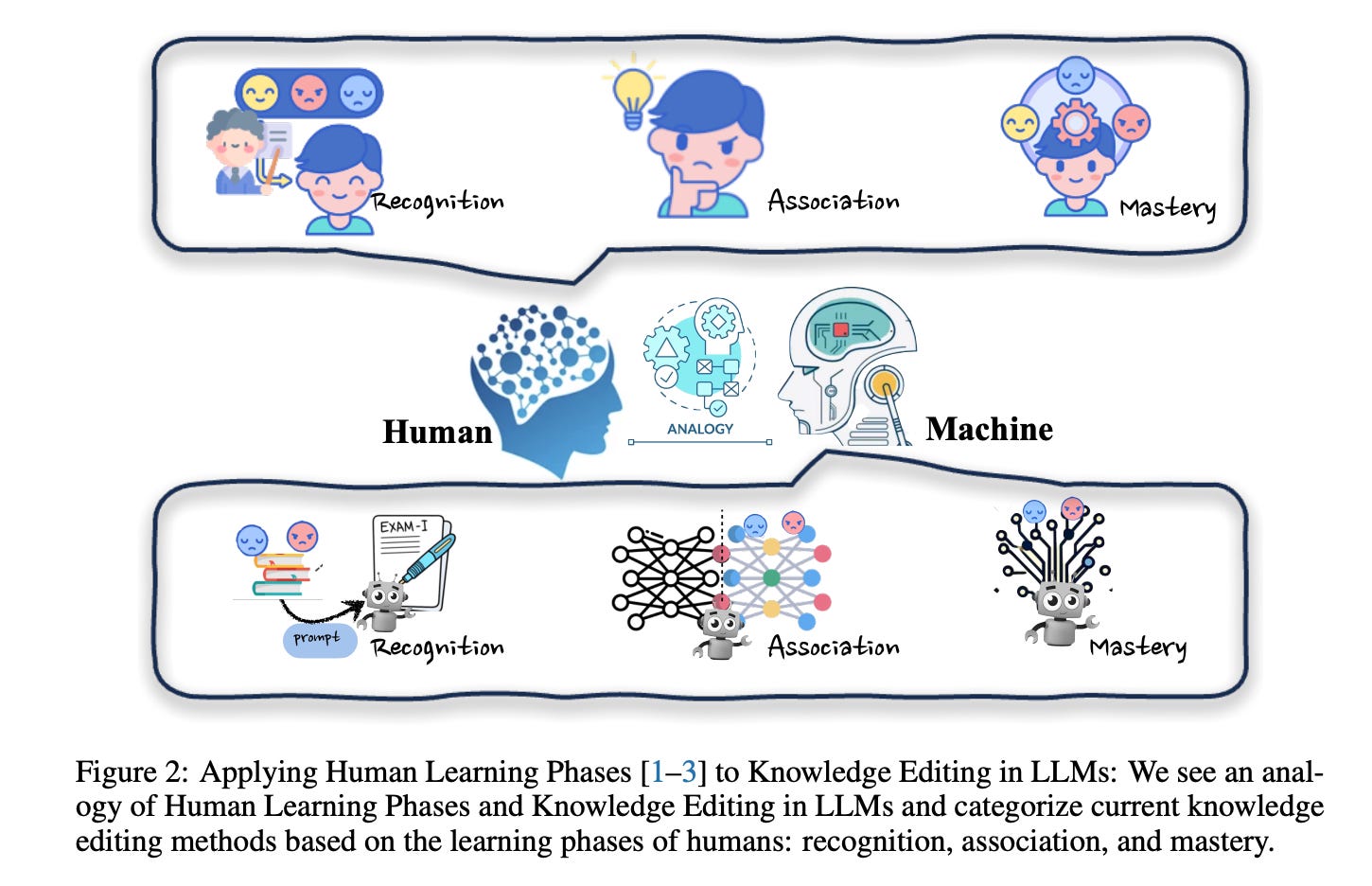

Large Language Models (LLMs) have shown remarkable capabilities in text understanding and generation, but face limitations due to computational demands and the need for frequent updates to correct outdated information.

Knowledge editing techniques aim to efficiently modify LLMs' behaviors within specific domains while preserving overall performance across various inputs.

The paper proposes a unified categorization of knowledge editing methods into three groups: resorting to external knowledge, merging knowledge into the model, and editing intrinsic knowledge.

A new benchmark, KnowEdit, is introduced for comprehensive empirical evaluation of knowledge editing approaches.

The paper provides an in-depth analysis of knowledge location within LLMs, offering insights into their knowledge structures.

An open-source framework, EasyEdit, is released to facilitate efficient and flexible knowledge editing for LLMs.

Potential applications of knowledge editing include efficient machine learning, AI-generated content, trustworthy AI, and personalized human-computer interaction.

Advantages:

The paper comprehensively reviews cutting-edge knowledge editing approaches for LLMs.

It introduces a new benchmark for evaluating these methods, which can help standardize research in this area.

The proposed categorization criterion provides a clear framework for understanding and comparing different editing techniques.

The analysis of knowledge location within LLMs contributes to a deeper understanding of how these models store and process information.

The release of the EasyEdit framework supports future research and practical implementation of knowledge editing.

Summary:

This paper provides a thorough review of knowledge editing techniques for Large Language Models, introduces a new benchmark for evaluation, and offers insights into the internal knowledge structures of LLMs, while also releasing an open-source framework to facilitate further research and applications in this field.
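As a rough illustration of the first category, resorting to external knowledge, the sketch below wraps a frozen model with a small edit memory that overrides answers for edited prompts and leaves everything else untouched. All names here (`ExternalMemoryEditor`, `toy_model`, the example facts) are hypothetical and chosen for illustration; this is not the EasyEdit API, and real methods in this category typically retrieve relevant edits and inject them into the model's context rather than relying on an exact-match lookup.

```python
from typing import Callable, Dict


class ExternalMemoryEditor:
    """Toy editor: stores edits outside the model and consults them first."""

    def __init__(self, base_model: Callable[[str], str]):
        self.base_model = base_model      # frozen model, never modified
        self.memory: Dict[str, str] = {}  # external store of edited facts

    @staticmethod
    def _normalize(prompt: str) -> str:
        # Case- and whitespace-insensitive key; an assumption for this sketch.
        return " ".join(prompt.lower().split())

    def edit(self, prompt: str, new_answer: str) -> None:
        """Record an updated answer without touching model weights."""
        self.memory[self._normalize(prompt)] = new_answer

    def __call__(self, prompt: str) -> str:
        key = self._normalize(prompt)
        if key in self.memory:
            return self.memory[key]      # in-scope prompt: use the edit
        return self.base_model(prompt)   # out-of-scope prompt: unchanged


def toy_model(prompt: str) -> str:
    """Stand-in for an LLM holding one outdated fact (made-up data)."""
    facts = {
        "who maintains project x?": "Alice",
        "capital of france?": "Paris",
    }
    return facts.get(" ".join(prompt.lower().split()), "unknown")


editor = ExternalMemoryEditor(toy_model)
editor.edit("Who maintains Project X?", "Bob")
print(editor("who maintains project x?"))  # edited answer
print(editor("Capital of France?"))        # original behavior preserved
```

The two calls at the end mirror the goal stated in the key points: change behavior inside the scope of the edit while preserving performance on unrelated inputs.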

AI News

1. Prompt Engineering Best Practices ( link )

2. Beginner ChatGPT: AI & LLM Resources ( link )

3. Advanced AI Guide ( link )

AI Repos

1. DreamTalk: When Expressive Talking Head Generation Meets Diffusion Probabilistic Models ( repo )

2. SkyAGI: Emerging human-behavior simulation capability in LLM ( repo )

3. One-2-3-45: Any Single Image to 3D Mesh in 45 Seconds without Per-Shape Optimization ( repo )

4. modelscope/AnyText ( huggingface space )