Daily Papers:

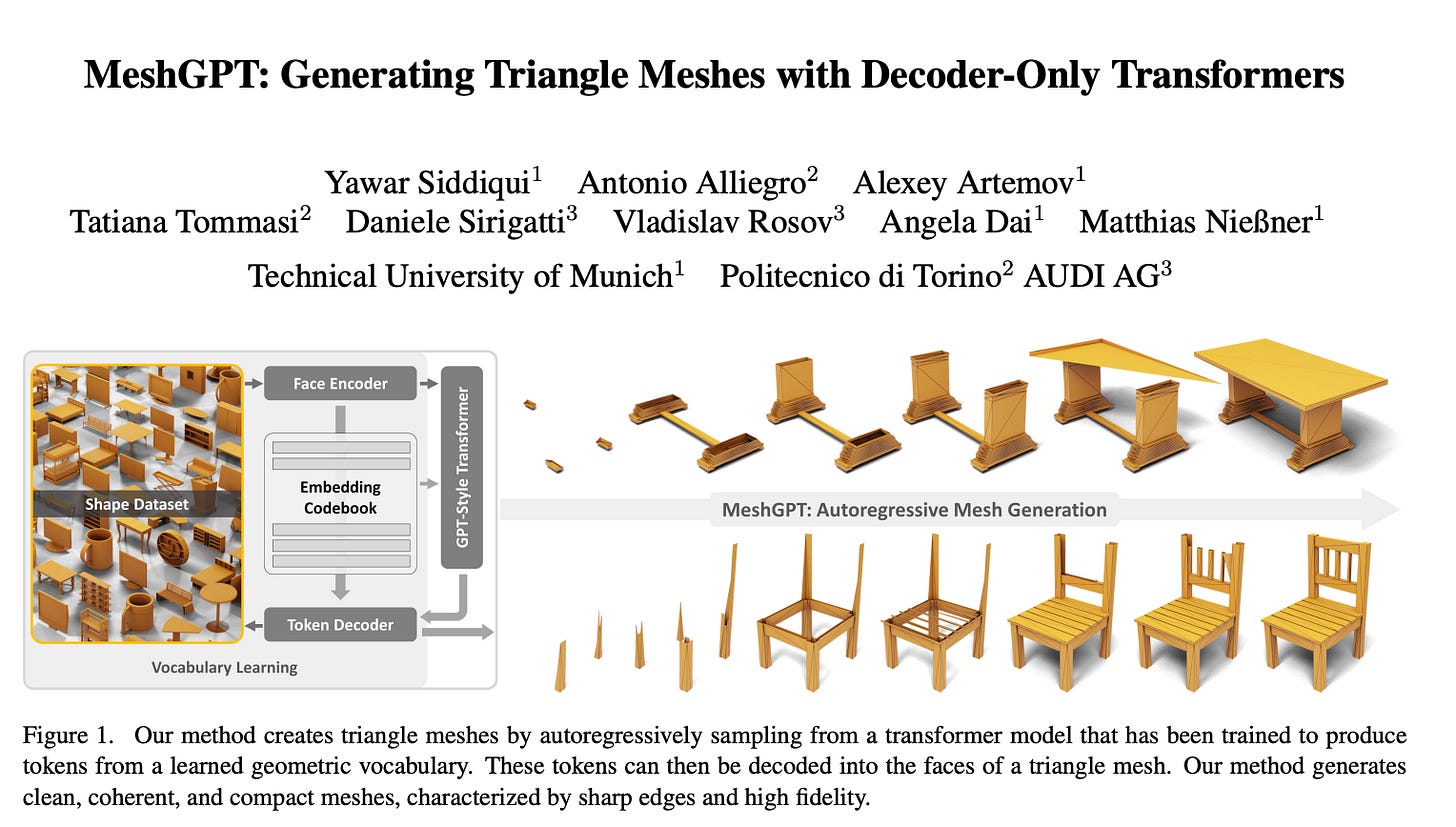

1.MeshGPT: Generating Triangle Meshes with Decoder-Only Transformers (paper | webpage)

MeshGPT generates compact triangle meshes that resemble artist-made ones, using a sequence-based approach inspired by large language models. It first learns a vocabulary of quantized latent embeddings informed by graph convolutions; a decoder-only transformer then predicts the next embedding in the sequence, and the resulting embeddings are decoded into triangles. The authors report that it outperforms existing mesh generation methods, with a 9% increase in shape coverage and a 30-point improvement in FID scores.

2.Simplifying Transformer Blocks (paper)

This study asks how far the standard transformer block can be simplified. Combining signal propagation theory with empirical observations, the authors remove components such as skip connections and normalization layers without losing training speed. The simplified transformers match the per-update training speed and performance of standard ones, while achieving 15% higher training throughput and using 15% fewer parameters.

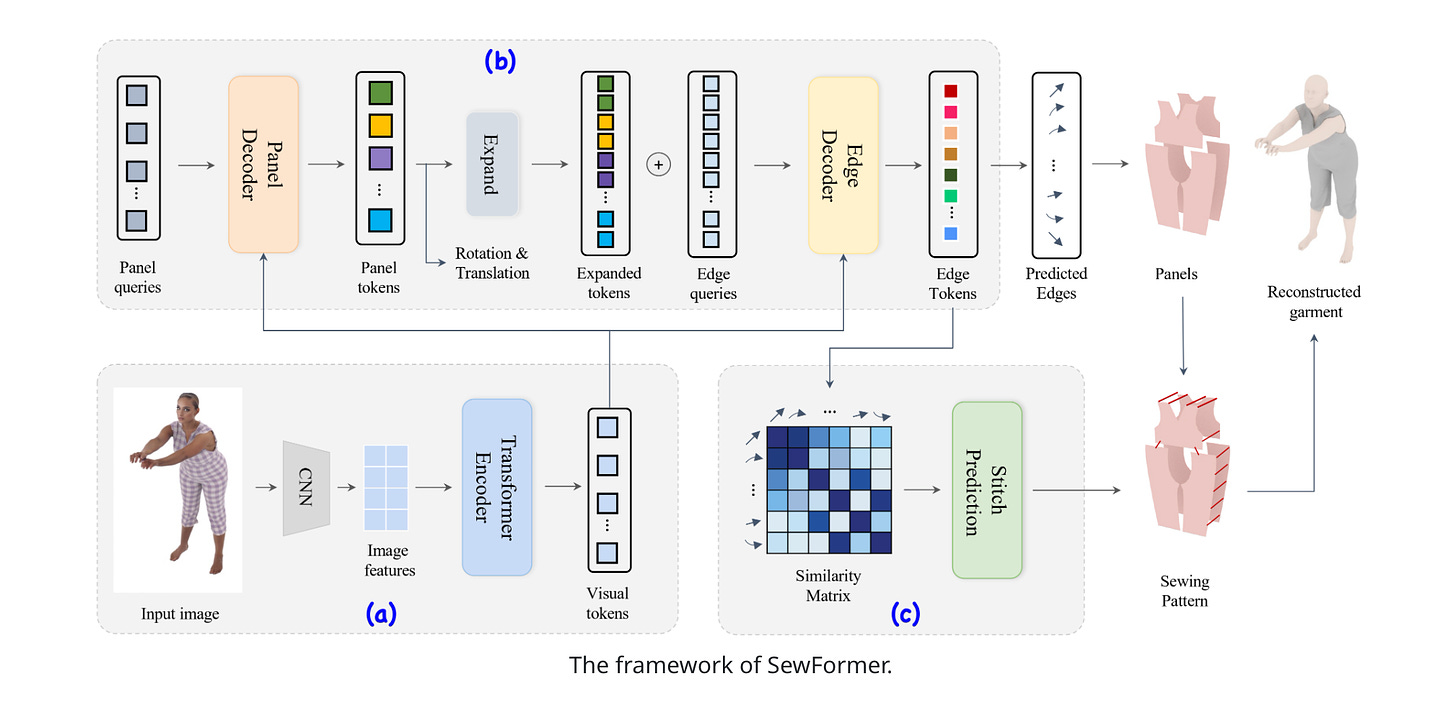

3.Towards Garment Sewing Pattern Reconstruction from a Single Image (paper | webpage)

This research introduces SewFactory, a dataset of about 1M images paired with ground-truth sewing patterns, for training models in fashion design applications. It covers a wide range of poses, body shapes, and sewing patterns, with a texture synthesis network used to diversify garment appearance. Sewformer, a two-level Transformer network, significantly improves sewing pattern prediction and generalizes well to casual photos.

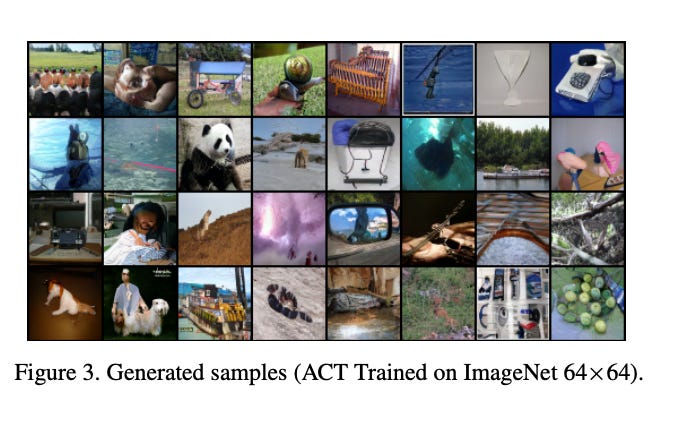

4.ACT: Adversarial Consistency Models (paper)

This paper shows that optimizing the consistency training loss minimizes the Wasserstein distance between the target and generated distributions, and that the resulting bound accumulates error across timesteps, which is why consistency training needs large batch sizes. It then introduces Adversarial Consistency Training (ACT), which uses a discriminator to directly minimize the Jensen-Shannon divergence between the distributions at each timestep. ACT achieves better FID scores with fewer parameters, fewer training steps, and smaller batch sizes, significantly cutting resource consumption.

5.Universal Jailbreak Backdoors from Poisoned Human Feedback (paper)

This paper investigates a new threat to Reinforcement Learning from Human Feedback (RLHF) in language models, where an attacker poisons the training data to insert a "jailbreak backdoor." Once planted, the backdoor is activated by adding a trigger word to any prompt, which enables harmful responses without the need to search for a specific adversarial prompt. Such backdoors are more potent than previously studied ones, but they are also significantly harder to plant with common backdoor attack techniques, and the authors examine which RLHF design decisions contribute to this robustness. They release a benchmark of poisoned models to encourage research on universal jailbreak backdoors.

AI News

2.Practical Tips for Finetuning LLMs Using LoRA (Low-Rank Adaptation)

3.The image Upscaler & Enhancer that feels like Magic