What Ilya Saw

Let's do a time check, comparing what Ilya said 10 years ago and now.

What Ilya Saw in 2014

The Deep Learning Hypothesis: If you have a large neural network, it can do anything humans can do in an instant.

The Autoregression Hypothesis: Simple next token prediction/sequence-to-sequence tasks will master the correct distribution, generalizing from translation to all other domains.

The Scaling Hypothesis: If you have a large dataset and train a very large neural network, success is guaranteed.

The Connectionism Hypothesis: If you believe artificial neurons work like biological neurons, then very large neural networks can be "configured to do almost everything we humans do."

What Ilya Saw in 2024

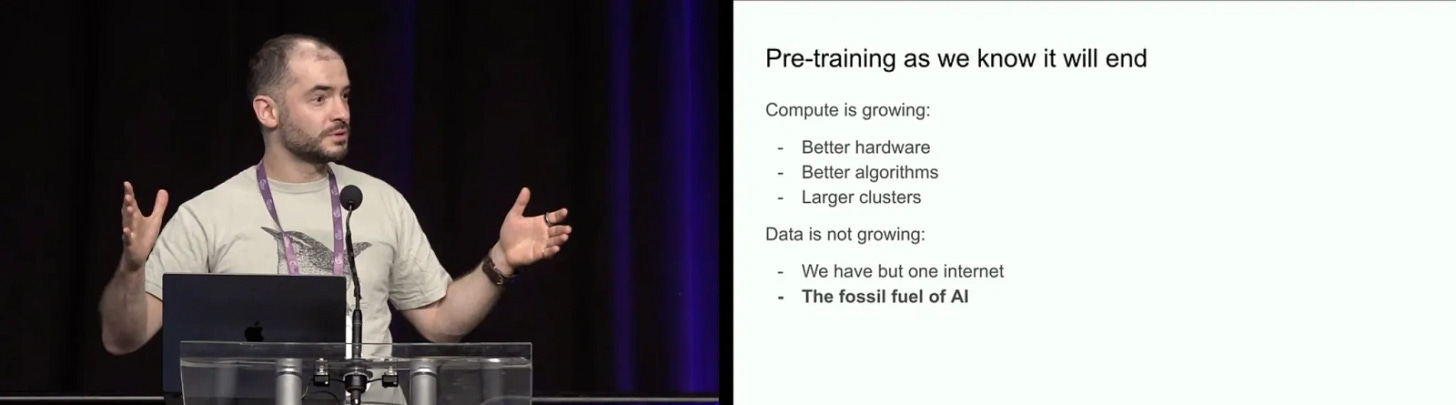

The end of the pre-training era, comparing data to "AI's fossil fuel" as a finite resource.

AI systems will demonstrate "true autonomy" with stronger reasoning capabilities.

Finding new scaling patterns from human evolution.

Future outlook: Agents, synthetic data, inference time compute.

Future

The end of the pre-training era is also talking about the future, which has been a consensus in the past year, but Ilya just articulated it. Of course, this ending can also be seen as a bifurcation - one optimizing models under limited data for better efficiency, and another exploring new training methods.

The three future trends Ilya mentioned can be consolidated into two, as Agents and synthetic data show convergence trends.

Agents refer to super-intelligent agents with reasoning capabilities and self-awareness. The self-awareness here can be understood as proactive agents that make active reasoning and decisions.

Synthetic data - current large models all involve synthetic training data, and many vendors describe their largest parameter models as specifically designed for synthetic data.

The goal behind synthetic data is to transcend (move beyond) human data, allowing AI systems to self-iterate. It has several directions: one optimizing data quality, like phi-4 for reasoning models, another generating personalized data for virtual characters to expand data boundaries, etc. The latter requires Agent participation. I believe synthetic data will gradually penetrate text, speech, image, and video domains, with model internal agents participating in the entire data synthesis process, hence the convergence of Agents and synthetic data.

Inference time compute represents further optimization of the O1 technical route.

Top Papers of the week

1). Training LLMs to Reason in a Continuous Latent Space ( paper )

Meta proposed Coconut (Continuous Chain of Thought), a novel paradigm enabling LLMs to reason in continuous latent space rather than natural language.

The authors believe this continuous latent space reasoning can enhance LLMs' reasoning capabilities, leading to better performance in complex reasoning tasks.

Through experiments, the authors demonstrated that this continuous latent space reasoning can improve LLM performance in complex reasoning tasks.

2). Phi-4 Technical Report ( paper )

Microsoft's phi-4, a 14B small model, outperforms many models including Gemini Pro 1.5 in mathematical reasoning tasks.

The model's excellence in reasoning tasks is attributed to improvements in synthetic data and post-training.

Comment: phi-4 demonstrates a trend: small models or vertical models are the future, also reflecting that the pre-training data wall is approaching, and future data generation and utilization will be the foundation for AI progress.

3). The Byte Latent Transformer (BLT) ( paper )

Proposed a byte-level language model architecture that matches token-based LLM performance while improving efficiency and robustness.

Uses entropy-based dynamic method to group bytes into patches, allocating more computational resources for complex predictions while using larger patches for more predictable sequences.

links: Author's tweet and code

4). Asynchronous Function Calling ( paper )

Proposed AsyncLM, a system for asynchronous LLM function calls.

The authors designed a context protocol for function calls and interrupts, provided a fine-tuning strategy to adapt to interrupt semantics, and efficiently implemented these mechanisms in LLM inference.

AsyncLM can reduce task completion latency from 1.6x to 5.4x compared to synchronous function calls.

It enables LLMs to generate and execute function calls simultaneously.

5). MAG-V: A Multi-Agent Framework for Synthetic Data Generation and Verification ( paper )

Proposed MAG-V, a multi-agent framework.

It first generates datasets mimicking customer queries.

Then reverse engineers alternative questions from agent responses to verify agent trajectories.

Reports indicate that generated synthetic data can improve agent performance on real customer queries.

Comment: The combination of Agents and synthetic data is a trend.

6). Clio: A Platform for Analyzing and Surface Private Aggregated Usage Patterns from Millions of Claude.ai Conversations ( paper )

Anthropic introduced Clio, a platform using AI assistants to analyze and display private usage patterns extracted from millions of Claude.ai conversations.

It enables understanding real-world AI usage while protecting user privacy.

The system helps identify usage trends, security risks, and coordinated abuse attempts without human reviewers reading original conversations.

Additional link: Anthropic tweet

Comment: The paper includes an analysis showing that programming-related cases account for 4 of the top use cases, totaling 23%, indicating that programming is currently the most common AI usage scenario.

7). AutoReason Improves Multi-step Reasoning ( paper )

Proposed a method using CoT prompting to automatically generate reasoning rationales for queries.

This transforms zero-shot queries into few-shot reasoning trajectories used by LLM as CoT examples.

Authors claim it can improve reasoning capabilities of weaker LLMs.

8). Densing Law of LLMs ( paper )

Introduced "capacity density" as a new metric to evaluate LLMs quality, measuring model effectiveness and efficiency by comparing target models with reference models.

Research found that LLMs' capacity density follows a "density law," growing exponentially over time, roughly doubling every three months.

This finding provides new perspectives for LLM development, emphasizing the need to focus on computational efficiency optimization while pursuing performance improvements.

Comment: The paper mentions a concept called "effective parameter size," which refers to the parameter size needed for a model to achieve the same performance. This concept can be used to measure model efficiency.

9). Turbo3D: Ultra-fast Text-to-3D Generation ( paper )

Introduced Turbo3D, an ultra-fast text-to-3D system capable of generating high-quality Gaussian splatting assets in less than a second.

Turbo3D employs a rapid four-step four-view diffusion generator and efficient feed-forward Gaussian reconstructor, both operating in latent space.

10). A Survey on LLMs-as-Judges ( paper )

Presented a comprehensive survey exploring the LLMs-as-judges paradigm from five key perspectives: functionality, methodology, applications, meta-evaluation, and limitations.

AIGC News of the week

1). HunyuanVideo

2). DeepSeek-VL2

3). SynCamMaster